Primary Case Study: OG-015

Technology Portfolio Management

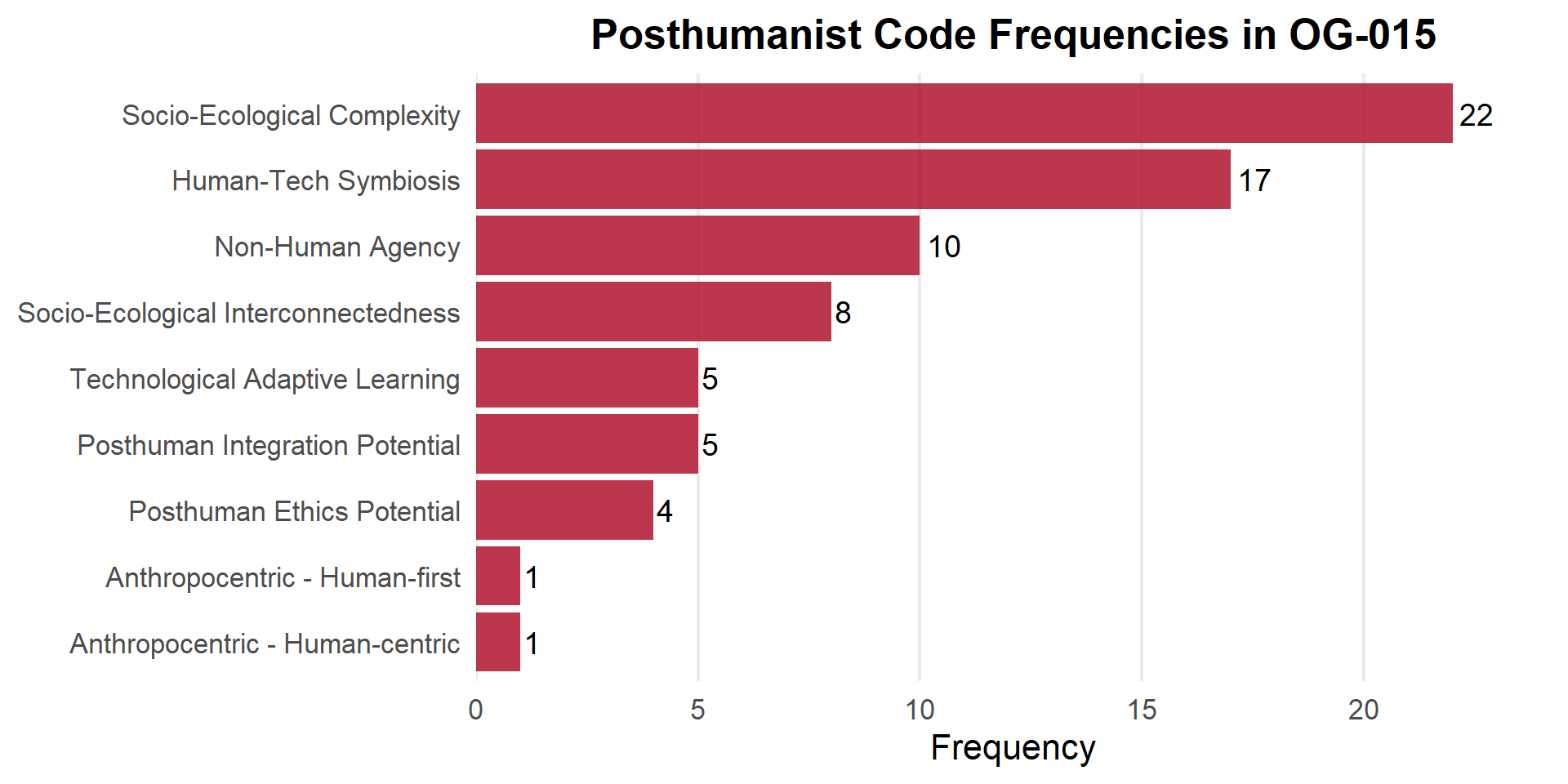

73 coded instances

9 posthuman categories

Semantic Technologies for Cybersecurity Education Competencies

JSON-LD Implementation of Distributed Learning Analytics

2025-11-05

Current Ed Tech

Individual Humans + Passive AI Tools

Reality

Human ↔︎ AI Assemblages

89.4%

of education-focused NICE Framework work role competencies contain posthuman elements

How do we track/assess/teach this?

Decentering the human

Agency is distributed

Technology mediates, not just supports

A methodological framework

Not a complete framework analysis

Theory → JSON-LD → SPARQL

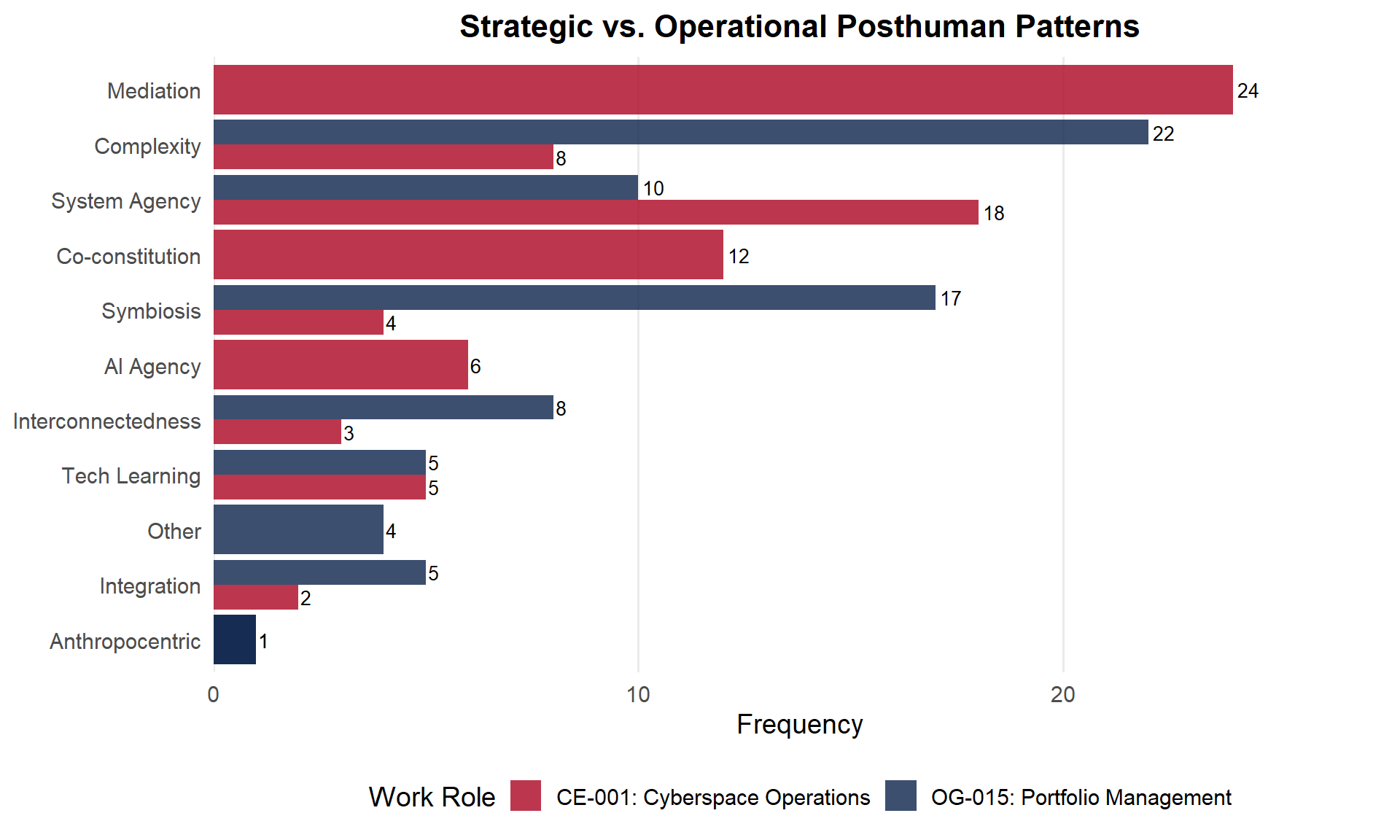

Demonstrated through OG-015, with preliminary CE-001 extension

Posthuman Theory → JSON-LD Schema → Queryable Competencies

JSON-LD Structure:

Context → Analysis → Code Frequencies

SE-C: 22 | HTE-S: 17 | NHA-S: 10
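The Context → Analysis → Code Frequencies layout can be pictured with a minimal sketch, built here as a Python dictionary and serialized with the standard `json` module. The `posthuman:` and `nice:` namespace IRIs, the `posthumanAnalysis` property, the role name, and the counts come from the slides. The `schema:` IRI, the `nice:OG-015` identifier, and the `totalCodedInstances` and `codeFrequencies` keys are hypothetical names chosen for illustration, not the project's published schema.

```python
import json

# Sketch of one work-role record. Namespace IRIs for posthuman: and nice: are
# copied from the deck's SPARQL PREFIX lines. The schema.org IRI, the @id, and
# the keys "totalCodedInstances" / "codeFrequencies" are assumptions.
doc = {
    "@context": {
        "posthuman": "https://posthuman.education/ontology#",
        "nice": "https://nice.nist.gov/framework/terms#",
        "schema": "https://schema.org/",
    },
    "@id": "nice:OG-015",  # hypothetical identifier for the work role
    "@type": "nice:WorkRole",
    "schema:name": "Technology Portfolio Management",
    "posthuman:posthumanAnalysis": {
        "posthuman:totalCodedInstances": 73,
        # Only the three codes shown on the slide; the other six are not listed.
        "posthuman:codeFrequencies": {"SE-C": 22, "HTE-S": 17, "NHA-S": 10},
    },
}

analysis = doc["posthuman:posthumanAnalysis"]
listed_total = sum(analysis["posthuman:codeFrequencies"].values())

# The three listed codes account for 49 of the 73 coded instances.
print(listed_total, "of", analysis["posthuman:totalCodedInstances"])

# Serialize to JSON-LD text ready for a validator or triplestore loader.
serialized = json.dumps(doc, indent=2)
```

Keeping the frequencies inside the analysis node means a single record carries both the framework identity and the coding results.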

    PREFIX posthuman: <https://posthuman.education/ontology#>
    PREFIX nice: <https://nice.nist.gov/framework/terms#>
    # Assumed namespace; use the IRI declared in the JSON-LD context.
    PREFIX schema: <https://schema.org/>

    SELECT ?workRole ?name ?entanglementType
    WHERE {
      ?workRole a nice:WorkRole ;
                schema:name ?name ;
                posthuman:posthumanAnalysis ?analysis .
      ?analysis posthuman:primaryCategories ?category .
      ?category a posthuman:HumanTechnologyEntanglement ;
                posthuman:subtype ?entanglementType .
    }
    ORDER BY ?entanglementType

Standard semantic web: works with any triplestore
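To make the query's join logic concrete, the sketch below walks the same graph pattern by hand over a tiny in-memory set of triples, using only the Python standard library. It is an illustration of what the pattern matches, not how a triplestore evaluates it. The triples are hypothetical stand-ins: the blank-node labels, the `nice:OG-015` identifier, and the `example-subtype` value are placeholders, and only the property names, class names, and role name come from the slides.

```python
RDF_TYPE = "rdf:type"  # what the SPARQL keyword `a` abbreviates

# Hypothetical triples standing in for a loaded graph.
triples = {
    ("nice:OG-015", RDF_TYPE, "nice:WorkRole"),
    ("nice:OG-015", "schema:name", "Technology Portfolio Management"),
    ("nice:OG-015", "posthuman:posthumanAnalysis", "_:analysis1"),
    ("_:analysis1", "posthuman:primaryCategories", "_:category1"),
    ("_:category1", RDF_TYPE, "posthuman:HumanTechnologyEntanglement"),
    ("_:category1", "posthuman:subtype", "example-subtype"),  # placeholder
}


def objects(subject, predicate):
    """Return every object o with (subject, predicate, o) in the graph."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def subjects_of_type(rdf_class):
    """Return every subject declared as an instance of rdf_class."""
    return [s for s, p, o in triples if p == RDF_TYPE and o == rdf_class]


def entanglement_rows():
    """Follow work role -> analysis -> category -> subtype, as the query does."""
    rows = []
    for role in subjects_of_type("nice:WorkRole"):
        for name in objects(role, "schema:name"):
            for analysis in objects(role, "posthuman:posthumanAnalysis"):
                for category in objects(analysis, "posthuman:primaryCategories"):
                    types = objects(category, RDF_TYPE)
                    if "posthuman:HumanTechnologyEntanglement" not in types:
                        continue  # category is some other posthuman class
                    for subtype in objects(category, "posthuman:subtype"):
                        rows.append((role, name, subtype))
    # Mirrors ORDER BY ?entanglementType.
    return sorted(rows, key=lambda row: row[2])


rows = entanglement_rows()
for work_role, name, entanglement_type in rows:
    print(work_role, name, entanglement_type)
```

Each nested loop corresponds to one triple pattern in the `WHERE` clause, so a work role appears in the result only when the whole chain from role to entanglement subtype is present.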

Before

Track: Individual performance

Now

Track: Human-AI collaboration

What we can now measure

Systematic competency enhancement

Evidence-based curriculum design

Distributed Agency

Technological Mediation

Adaptive Collaboration

Now assessable, not just theoretical

Backward Compatible + Posthuman Enhanced

Incremental adoption, not replacement

Healthcare • Climate Science • Engineering

Anywhere human-AI collaboration is fundamental

Reproducible methodology

✓ JSON-LD validated

✓ SPARQL queries work

✓ Standards compatible

Completed: OG-004/005 (JCERP) + OG-015 + CE-001 (ISCAP)

Next Phase: 52-role systematic analysis

Then: Public framework release + Implementation guides

Fall 2026 target for complete framework

1. Posthuman theory → Practical tech

2. Assess what actually matters

3. Enhance existing systems

ryanstraight@arizona.edu

ORCID: 0000-0002-6251-5662

escamillaa@arizona.edu

ISCAP 2025 | Straight & Escamilla | November 5, 2025