My ISCAP 2025 talk didn’t go exactly to plan. Time was tighter than expected, and I had to cut the talk short right as I was getting to the methodology, which was the part where we demonstrate how to translate posthumanist theory into working code that educational systems can actually use to recognize human-AI collaboration patterns. The feedback was immediate though: “I want to hear the rest of this.”

I wanted to share the rest, believe me! So, here’s basically the talk I was going to give, with all the methodology, data visualizations, SPARQL queries, and practical applications that make this work.

The Problem We’re Trying to Solve

Learning management systems can tell you whether students finished the module, watched the video, or took the quiz. But they have absolutely no idea whether those students can actually work with AI.

And that’s increasingly what professional work looks like: humans and AI systems collaborating in ways that create capabilities neither could achieve independently, rather than humans using AI as a passive tool that waits for commands. This is especially true in cybersecurity, where analysts work alongside automated detection systems, threat intelligence platforms, and AI-powered forensics tools in the complex assemblages that define contemporary practice.

My graduate thesis advisee, Aaron Escamilla, and I have been working on something that addresses this gap: a way to make educational technologies actually recognize and measure human-AI collaboration through semantic web technologies that preserve theoretical sophistication while enabling computational analysis.

What We Measure vs What Matters

Think about how a cybersecurity analyst actually works today. They’re not sitting alone reading threat reports and making solo decisions. They’re working through and with automated systems, to the point where the distinction between “what the human detected” and “what the system detected” becomes meaningless, because the detection emerges from the assemblage itself.

The National Initiative for Cybersecurity Education (NICE) Framework describes what cybersecurity professionals need to know and be able to do. In our pilot study analyzing education-focused roles (OG-004: Cybersecurity Curriculum Development and OG-005: Cybersecurity Instruction), we found something striking: 89.4% of the competency statements contained evidence of human-AI collaboration patterns.

That’s great news, right? Distributed decision-making, technological mediation of perception, adaptive learning across human and technological systems: these aren’t fringe concepts from critical theory any longer. They’re embedded in the national workforce standards, right there in the competencies we’re supposed to be teaching. Yet where are the educational technology tools that can track, assess, or teach these collaborative competencies?

Your LMS can tell you a student completed a cybersecurity module. It can’t tell you whether that student can effectively collaborate with AI security tools, recognize when algorithms are mediating their threat perception, or coordinate action across human-technology networks. We’re using 20th-century educational technology to prepare students for 21st-century professional practice, where human-AI collaboration, not completion rates, defines competence.

Building a New Representation System

Instead of trying to force these collaborative competencies into existing educational technology categories (which inevitably fails, because those categories assume human-centric models), we built a new representation system using JSON-LD (JavaScript Object Notation for Linked Data).

I’ve described it in the past as nutrition labels for human-AI collaboration patterns. Just as nutrition labels use standardized categories (calories, protein, fat) to make different foods comparable, our system uses standardized categories drawn from posthumanist theory (symbiosis, augmentation, distributed agency, and others) to make different professional competencies comparable and computationally queryable.

Here’s what it looks like in practice:

```json
{
  "@context": {
    "posthuman": "https://posthuman.education/ontology#",
    "nice": "https://nice.nist.gov/framework/terms#"
  },
  "posthumanAnalysis": {
    "codeFrequency": {
      "posthuman:SE-C": 22,
      "posthuman:HTE-S": 17,
      "posthuman:NHA-S": 10
    }
  }
}
```

Here SE-C marks system complexity, HTE-S human-technology symbiosis, and NHA-S system agency. These codes represent specific patterns of human-AI collaboration that we identified through systematic qualitative analysis of the NICE Framework. That analysis is grounded in posthumanist perspectives that recognize both humans and technologies as active participants in professional practice. Expressed this way, the patterns become machine-readable, queryable, and actionable.
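Here is a minimal Python sketch (standard library only) that makes "machine-readable" concrete. It loads the snippet above and ranks the codes by frequency. The `expand_curie` helper is an illustrative assumption: it does naive prefix substitution against the `@context`. It is not full JSON-LD expansion, which would need a dedicated JSON-LD processor.

```python
import json

# The JSON-LD snippet from above, embedded as a string.
SNIPPET = """
{
  "@context": {
    "posthuman": "https://posthuman.education/ontology#",
    "nice": "https://nice.nist.gov/framework/terms#"
  },
  "posthumanAnalysis": {
    "codeFrequency": {
      "posthuman:SE-C": 22,
      "posthuman:HTE-S": 17,
      "posthuman:NHA-S": 10
    }
  }
}
"""

def expand_curie(curie: str, context: dict) -> str:
    """Expand a compact IRI like 'posthuman:SE-C' using the @context prefixes.

    Unknown prefixes are returned unchanged (naive; not a JSON-LD processor).
    """
    prefix, _, local = curie.partition(":")
    base = context.get(prefix)
    return base + local if isinstance(base, str) else curie

def ranked_codes(doc: dict) -> list[tuple[str, int]]:
    """Return (full IRI, count) pairs, most frequent first."""
    context = doc["@context"]
    freq = doc["posthumanAnalysis"]["codeFrequency"]
    pairs = [(expand_curie(code, context), n) for code, n in freq.items()]
    return sorted(pairs, key=lambda p: p[1], reverse=True)

doc = json.loads(SNIPPET)
for iri, count in ranked_codes(doc):
    print(f"{count:3d}  {iri}")
```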

What This Actually Enables

Once these patterns are in a machine-readable format, some interesting opportunities open up.

A strategic portfolio management role needs fundamentally different human-AI collaboration skills than a tactical cyberspace operations role, and now we can identify, measure, and teach these differences systematically across the entire framework through computational queries rather than manual reading and hoping we caught everything important. Run a query like “show me competencies with high complexity recognition but low technological adaptation codes” and you’ve just found a curriculum gap where competencies acknowledge complex systems but don’t address how those systems learn and evolve—an evidence-based curriculum enhancement opportunity that you’d probably never have found through manual analysis.
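Below is a minimal sketch of that gap query in plain Python. It assumes each competency is stored as a tally of the codes applied to it. The competency IDs, the `AL-T` abbreviation for technological adaptation, and the thresholds standing in for "high" and "low" are all hypothetical choices for illustration.

```python
from collections import Counter

# Hypothetical coded competencies: competency ID -> how often each code was applied.
# "AL-T" (technological adaptation) is an illustrative label only.
CODED = {
    "T0001": Counter({"SE-C": 3, "HTE-S": 1}),
    "T0002": Counter({"SE-C": 2, "AL-T": 2}),
    "T0003": Counter({"HTE-M": 1}),
}

def curriculum_gaps(coded, high="SE-C", low="AL-T", min_high=2, max_low=0):
    """IDs where `high` appears at least min_high times and `low` at most max_low times.

    A Counter returns 0 for missing codes, so absent codes count as "low".
    """
    return sorted(
        cid for cid, codes in coded.items()
        if codes[high] >= min_high and codes[low] <= max_low
    )

print(curriculum_gaps(CODED))  # ['T0001']
```

The thresholds are the interesting design decision. Setting `max_low=0` finds competencies that never mention adaptation, while loosening it surfaces competencies where adaptation is present but thin.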

Suddenly, we can design assessments that evaluate whether students can effectively coordinate with AI systems, recognize technological mediation, adapt their strategies based on AI capabilities—the collaborative competencies that actually define contemporary professional practice, not the checkbox exercises we’ve been settling for because that’s all our technology could handle.

Traditional learning analytics asks “Did the student complete the module?” while posthuman learning analytics asks “Can the student effectively collaborate with AI security tools in this operational context?” That’s the difference between compliance tracking and competence assessment.

We’ve intentionally designed this approach so it isn’t limited to cybersecurity, either. Anywhere human-AI collaboration is becoming fundamental to professional practice (we immediately thought of healthcare diagnostics, climate modeling, and engineering design), the same methodology applies. The framework is domain-agnostic.

The Three-Stage Translation Process

The methodology that makes this work involves three carefully designed stages that preserve theoretical sophistication while enabling computational processing:

Stage 1: Systematic Qualitative Coding

We applied the previously developed posthumanist framework to competency statements in selected NICE Framework work roles, covering all tasks, knowledge statements, and skills. The framework includes six main categories with fifteen subcodes tracking patterns like:

- Human-Technology Entanglement (HTE): How human and technological capabilities interweave (Symbiosis, Mediation, Co-constitution, Augmentation)

- Non-Human Agency (NHA): Where systems exercise autonomous judgment (AI, System, Emergent behaviors)

- Adaptive Learning (AL): System, technological, and human adaptation patterns

- Socio-Ecological Awareness (SE): Recognition of complexity and interconnectedness

- Posthuman Potential (PP): Emergent, integrative, and reconceptualizing elements

- Anthropocentric Elements (AE): Human-centric assumptions that resist distributed perspectives

This is rigorous qualitative analysis grounded in posthumanist theory, applying consistent coding criteria across the entire corpus with intercoder reliability checks and theoretical validation, not just tagging.
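The codebook can also be held as data, sketched here in Python. The category abbreviations come from the list above. The subcode names are read off its parenthetical descriptions, and treating AE as a single code with no subcodes is an assumption made here. Under that reading, the six categories yield fifteen subcodes. The small resolver turns labels like `HTE-S` into their full names.

```python
# Category abbreviation -> (full name, subcode names), read off the list above.
# AE having no subcodes is an assumption.
CODEBOOK = {
    "HTE": ("Human-Technology Entanglement",
            ["Symbiosis", "Mediation", "Co-constitution", "Augmentation"]),
    "NHA": ("Non-Human Agency", ["AI", "System", "Emergent"]),
    "AL": ("Adaptive Learning", ["System", "Technological", "Human"]),
    "SE": ("Socio-Ecological Awareness", ["Complexity", "Interconnectedness"]),
    "PP": ("Posthuman Potential", ["Emergent", "Integrative", "Reconceptualizing"]),
    "AE": ("Anthropocentric Elements", []),
}

def subcode_count(codebook) -> int:
    """Total number of subcodes across all categories."""
    return sum(len(subs) for _, subs in codebook.values())

def describe(code: str) -> str:
    """Resolve a code such as 'HTE-S' to 'Human-Technology Entanglement - Symbiosis'."""
    prefix, _, suffix = code.partition("-")
    name, subs = CODEBOOK[prefix]
    if not suffix:
        return name
    # Match the suffix against the start of each subcode name (e.g. 'S' -> 'Symbiosis').
    matches = [s for s in subs if s.upper().startswith(suffix.upper())]
    if len(matches) != 1:
        raise KeyError(f"unknown or ambiguous subcode: {code}")
    return f"{name} - {matches[0]}"

print(len(CODEBOOK), subcode_count(CODEBOOK))  # 6 15
print(describe("NHA-AI"))  # Non-Human Agency - AI
```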

Stage 2: Code-to-Property Mapping

Each qualitative code becomes a first-class JSON-LD entity with defined relationships to Schema.org vocabularies. This translation step preserves theoretical sophistication while enabling machine processing.

For example, HTE-S (Human-Technology Entanglement - Symbiosis) maps to a defined type in our posthuman ontology with relationship patterns linking to NICE Framework competency URIs, co-occurrence tracking with other codes, and frequency counts that remain theoretically interpretable. The complete translation schema preserves philosophical nuance. We’re not dumbing down the theory to fit the technology, but instead creating new ontological vocabulary that maintains theoretical rigor while enabling computational analysis.
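Here is a minimal Python sketch of what a single code might look like as a first-class JSON-LD node. `schema:DefinedTerm`, `schema:termCode`, and `schema:inDefinedTermSet` are real Schema.org vocabulary. The `posthuman:appliesTo`, `posthuman:coOccursWith`, and `posthuman:frequency` property names, the `posthuman:Codebook` identifier, and the competency ID are hypothetical placeholders, not the published schema.

```python
import json

CONTEXT = {
    "posthuman": "https://posthuman.education/ontology#",
    "nice": "https://nice.nist.gov/framework/terms#",
    "schema": "https://schema.org/",
}

def code_entity(code, label, competencies, co_occurs, frequency):
    """Build one qualitative code as a JSON-LD node.

    The schema:* terms are real Schema.org vocabulary; the posthuman:* property
    names are hypothetical placeholders.
    """
    return {
        "@context": CONTEXT,
        "@id": f"posthuman:{code}",
        "@type": ["posthuman:Code", "schema:DefinedTerm"],
        "schema:termCode": code,
        "schema:name": label,
        "schema:inDefinedTermSet": {"@id": "posthuman:Codebook"},
        # Links to the competencies this code was applied to.
        "posthuman:appliesTo": [{"@id": c} for c in competencies],
        # Other codes that appear alongside this one.
        "posthuman:coOccursWith": [{"@id": f"posthuman:{c}"} for c in co_occurs],
        "posthuman:frequency": frequency,
    }

entity = code_entity(
    "HTE-S",
    "Human-Technology Entanglement - Symbiosis",
    competencies=["nice:OG-015-T0001"],  # hypothetical competency identifier
    co_occurs=["SE-C", "NHA-S"],
    frequency=17,
)
print(json.dumps(entity, indent=2))
```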

Stage 3: Semantic Validation

The final step ensures that theoretical relationships remain computationally tractable. SPARQL queries must be able to surface co-occurrence patterns between complexity recognition and distributed agency, mediation without co-constitution, and strategic versus operational role differences. Every relationship that matters theoretically has to be queryable computationally.

The complete translation schema and code mappings are detailed in our published JCERP paper. The upshot is that the relationships that matter in posthumanist analysis remain intact and queryable in the computational representation.
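Co-occurrence is the simplest of these relationships to compute. The sketch below uses plain Python and made-up competency data to count how many competencies carry each unordered pair of codes. A SPARQL engine could answer the same question with a grouped query.

```python
from collections import Counter
from itertools import combinations

def co_occurrence(coded: dict[str, set[str]]) -> Counter:
    """Count how many competencies carry each unordered pair of codes.

    Pairs are stored in sorted order, so ('NHA-S', 'SE-C') and ('SE-C', 'NHA-S')
    are the same key.
    """
    pairs = Counter()
    for codes in coded.values():
        for a, b in combinations(sorted(codes), 2):
            pairs[(a, b)] += 1
    return pairs

# Hypothetical coded competencies (IDs and code sets are illustrative only).
CODED = {
    "c1": {"SE-C", "NHA-S", "HTE-S"},
    "c2": {"SE-C", "NHA-S"},
    "c3": {"HTE-M"},
    "c4": {"HTE-M", "HTE-C"},
}

pairs = co_occurrence(CODED)
print(pairs[("NHA-S", "SE-C")])  # 2
```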

What the Data Reveals

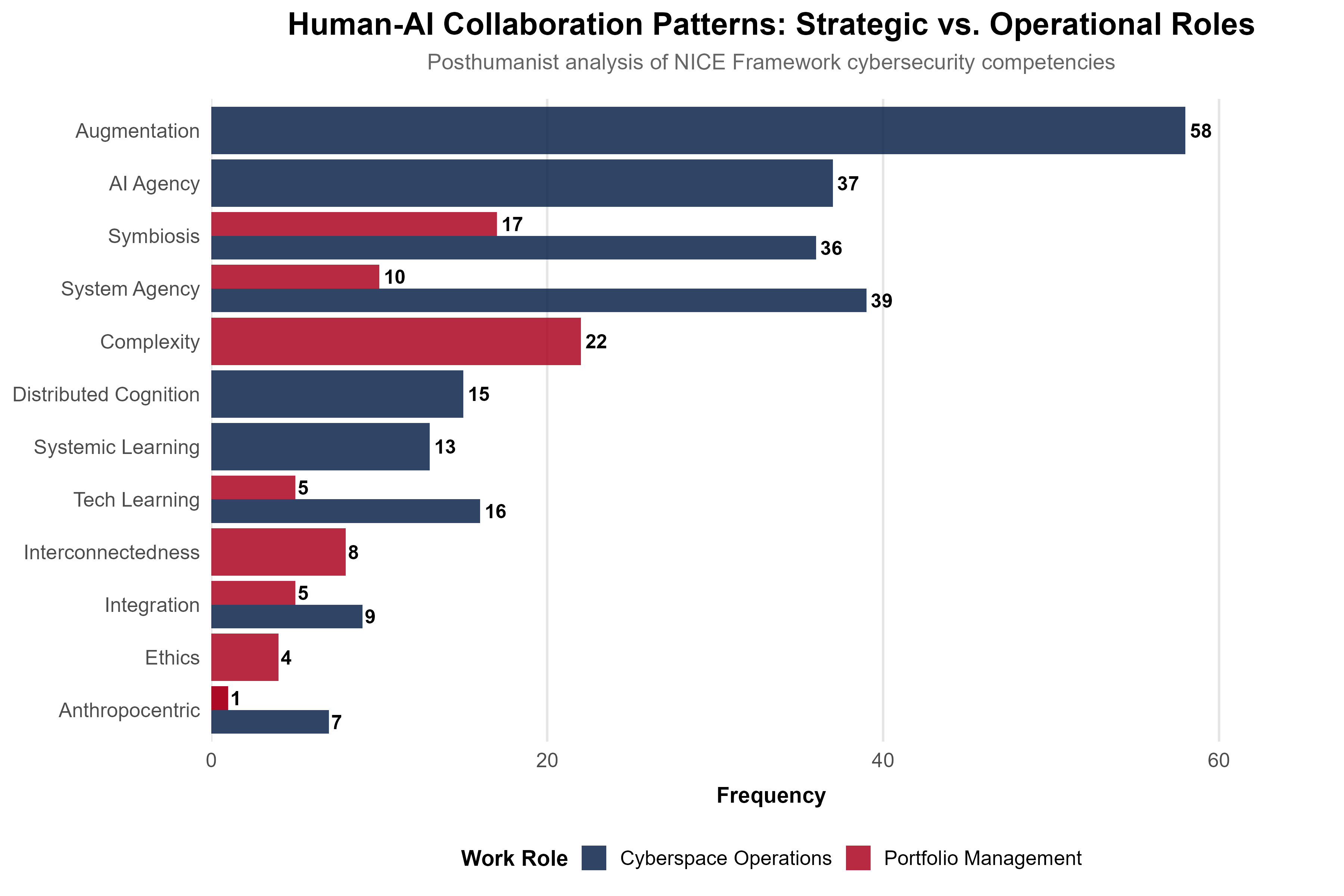

Strategic Roles: OG-015 Technology Portfolio Management

We analyzed OG-015 (Technology Portfolio Management) as our primary case study, which is a strategic role involving high-level decisions about technology investments, risk management, and policy development.

73 coded instances across 9 categories revealed a distinct pattern:

- 22 instances of Systems Emergence - Complexity (SE-C): Portfolio management inherently involves recognizing complex, adaptive sociotechnical systems

- 17 instances of Human-Technology Entanglement - Symbiosis (HTE-S): Strategic decisions require symbiotic collaboration between human judgment and algorithmic analysis

- 10 instances of Non-Human Agency - System (NHA-S): Automated systems exercise judgment in risk assessment, trend analysis, threat modeling

Strategic roles show high complexity recognition paired with symbiotic relationships. Humans and technology work together but keep distinct contributions: human strategic thinking combines with algorithmic pattern recognition to produce portfolio decisions that neither could make independently.

Operational Roles: CE-001 Cyberspace Operations

Following the ISCAP paper acceptance, we conducted preliminary analysis of CE-001 (Cyberspace Operations) for this talk to test whether the methodology reveals role-specific patterns. The results were dramatic and really quite fascinating.

82 coded instances across 36 task statements revealed fundamentally different patterns:

- 24 instances of Human-Technology Entanglement - Mediation (HTE-M): Operational cybersecurity work is fundamentally mediated—you literally cannot perceive cyber terrain without technological instrumentation

- 24 instances of Non-Human Agency (NHA): Systems exercise autonomous judgment across multiple forms—18 instances of System Agency (NHA-S) and 6 instances of AI Agency (NHA-AI) in threat detection, alert generation, and pattern recognition

- 12 instances of Human-Technology Entanglement - Co-constitution (HTE-C): Detection, analysis, and response emerge from assemblages where you can’t separate human from technological contributions

- 0 instances of Anthropocentric Elements (AE): Unlike strategic roles where human-centric approaches remain possible (though suboptimal), operational roles demand assemblages—temporal and epistemic conditions make human-centric approaches impossible

The same methodology applied to different role types reveals fundamentally different human-AI collaboration requirements. Strategic roles emphasize complexity recognition with symbiotic collaboration (distinct roles working together), while operational roles require technological mediation and co-constitution (inseparable entanglement). This makes sense, though! And it provides actionable guidance for curriculum design.
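The two roles have different numbers of coded instances (73 vs. 82), so normalizing by each role's total makes the profiles easier to compare. The counts below are the ones reported above. Codes not listed for a role are left out rather than assumed to be zero, so the shares don't sum to 100%. AE for CE-001 is the exception, since it is reported as 0.

```python
# Coded-instance counts as reported above; unlisted codes are omitted, not zeroed.
ROLES = {
    "OG-015": {"total": 73, "counts": {"SE-C": 22, "HTE-S": 17, "NHA-S": 10}},
    "CE-001": {"total": 82, "counts": {"HTE-M": 24, "NHA-S": 18, "NHA-AI": 6,
                                       "HTE-C": 12, "AE": 0}},
}

def shares(role: dict) -> dict[str, float]:
    """Percentage of the role's coded instances carried by each listed code."""
    total = role["total"]
    return {code: round(100 * n / total, 1) for code, n in role["counts"].items()}

def listed_share(role: dict) -> float:
    """Percentage of the role's coded instances covered by the listed codes together."""
    return round(100 * sum(role["counts"].values()) / role["total"], 1)

for name, role in ROLES.items():
    print(name, shares(role), f"listed: {listed_share(role)}%")
```

On these figures, the codes listed for OG-015 cover about 67% of its coded instances, and those listed for CE-001 cover about 73%.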

Making It Computationally Queryable

Once competencies are represented in JSON-LD with preserved posthumanist relationships, you can run queries that were previously impossible. Here’s a SPARQL query from our framework:

```sparql
PREFIX posthuman: <https://posthuman.education/ontology#>
PREFIX nice: <https://nice.nist.gov/framework/terms#>

SELECT ?competency ?description
WHERE {
  ?competency a nice:Competency ;
              posthuman:hasCode posthuman:HTE-M ;
              nice:description ?description .
  FILTER NOT EXISTS {
    ?competency posthuman:hasCode posthuman:HTE-C .
  }
}
```

This query asks: “Show me competencies coded for technological mediation (HTE-M) but not for co-constitution (HTE-C).” In these competencies, technology shapes perception and decision-making, but human and technological contributions remain theoretically separable. Each result is a curriculum design decision point. You might enhance these competencies with co-constitution elements, or deliberately keep them distinct for pedagogical reasons.
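Readers without a triple store at hand can run the same logic in plain Python over an in-memory set of triples. This is a sketch, not a SPARQL engine. The competency IDs and descriptions are invented. `rdf:type` stands in for SPARQL's `a` keyword, and the `not has(...)` check plays the role of `FILTER NOT EXISTS`.

```python
# (subject, predicate, object) triples using the query's prefixed names.
# Competency IDs and descriptions are invented for illustration.
TRIPLES = {
    ("nice:C1", "rdf:type", "nice:Competency"),
    ("nice:C1", "posthuman:hasCode", "posthuman:HTE-M"),
    ("nice:C1", "nice:description", "Interpret SIEM alert output"),
    ("nice:C2", "rdf:type", "nice:Competency"),
    ("nice:C2", "posthuman:hasCode", "posthuman:HTE-M"),
    ("nice:C2", "posthuman:hasCode", "posthuman:HTE-C"),
    ("nice:C2", "nice:description", "Co-produce detections with automated tooling"),
}

def mediation_without_coconstitution(triples):
    """Return (competency, description) rows: HTE-M present, HTE-C absent."""
    def has(s, p, o):
        return (s, p, o) in triples

    results = []
    for s, p, o in triples:
        if p != "nice:description":
            continue
        if (has(s, "rdf:type", "nice:Competency")
                and has(s, "posthuman:hasCode", "posthuman:HTE-M")
                # Equivalent of FILTER NOT EXISTS { ?competency posthuman:hasCode posthuman:HTE-C }
                and not has(s, "posthuman:hasCode", "posthuman:HTE-C")):
            results.append((s, o))
    return sorted(results)

print(mediation_without_coconstitution(TRIPLES))
```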

The power comes from systematic analysis at scale. Queries like “Show me competencies requiring distributed agency with high complexity recognition but low adaptation codes” identify gaps where curriculum acknowledges complex systems but doesn’t address system evolution. “Find competencies with symbiotic human-AI collaboration across strategic roles” enables curriculum coherence checks. “Identify competencies where AI agency appears without corresponding human oversight codes” reveals safety and ethics curriculum opportunities.

These queries execute in milliseconds across the entire framework. The alternative of manually reading and hoping you catch everything doesn’t scale, and introduces systematic bias based on what patterns researchers happen to notice.
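The queries above share one shape: require some codes, exclude others, and optionally restrict by role. Here is a minimal Python sketch of that pattern with hypothetical data. The codebook described earlier has no dedicated human-oversight code, so `HO` below is a made-up placeholder.

```python
from dataclasses import dataclass, field

@dataclass
class Competency:
    cid: str
    role: str
    codes: set[str] = field(default_factory=set)

def find(competencies, all_of=(), none_of=(), roles=None):
    """IDs of competencies carrying every code in all_of and none in none_of.

    If roles is given, only competencies from those work roles are considered.
    """
    want, avoid = set(all_of), set(none_of)
    return [
        c.cid for c in competencies
        if want <= c.codes
        and not (avoid & c.codes)
        and (roles is None or c.role in roles)
    ]

# Hypothetical data; "HO" is a placeholder human-oversight code.
COMPETENCIES = [
    Competency("a", "OG-015", {"HTE-S", "SE-C"}),
    Competency("b", "CE-001", {"NHA-AI", "HTE-M"}),
    Competency("c", "CE-001", {"NHA-AI", "HO"}),
    Competency("d", "OG-015", {"HTE-S", "NHA-S"}),
]

# Symbiotic collaboration within a strategic role:
print(find(COMPETENCIES, all_of={"HTE-S"}, roles={"OG-015"}))  # ['a', 'd']
# AI agency without a (hypothetical) oversight code:
print(find(COMPETENCIES, all_of={"NHA-AI"}, none_of={"HO"}))   # ['b']
```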

Practical Applications for Education

Transforming Learning Analytics

Traditional learning analytics tells you that a student completed the cybersecurity module, what their quiz score was, and how many times they watched the video. These are compliance metrics, and they often tell you nothing about the student’s actual competence.

Posthuman learning analytics asks different questions. Can the student effectively coordinate security responses across human analysts and automated detection systems? Can they recognize when SIEM algorithms are mediating their threat perception, or adapt collaboration strategies based on AI system capabilities? That’s the difference between tracking completion and assessing competence for contemporary professional practice.

Evidence-Based Curriculum Enhancement

With access to this full framework, you could run computational queries to identify curriculum gaps systematically. When you find competencies with complexity recognition but no technological adaptation codes, you’ve identified where curriculum acknowledges complex adaptive systems but doesn’t address how those systems learn and evolve.

Assessment Possibilities

We can now assess concepts that were previously just theoretical constructs:

- Distributed Agency: Performance tasks requiring students to coordinate across human-AI networks, with computational evaluation of coordination effectiveness

- Technological Mediation: Assessments where students must recognize and articulate how tools shape their perception (e.g., “How does this SIEM’s alert prioritization algorithm mediate your threat assessment?”)

- Adaptive Collaboration: Scenarios where students must adjust strategies based on AI system capabilities and limitations

These become measurable learning outcomes through computational representations in the semantic framework, not philosophical abstractions.

Integration Without Replacement

This doesn’t require replacing your existing educational technology infrastructure. JSON-LD schemas integrate with established educational metadata standards (Schema.org, IEEE Learning Object Metadata, IMS standards), allowing institutions to maintain current LMS platforms, learning analytics systems, and assessment infrastructure while adding posthumanist capabilities as enhanced metadata layers.

What does this mean, exactly? Standard properties continue working (completion tracking, grade recording) while posthumanist properties add capabilities for collaboration assessment, mediation recognition, and distributed agency coordination. Systems that don’t understand the enhanced metadata simply ignore it, making it backward compatible by design for incremental adoption.
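Here is a minimal Python sketch of the "ignore what you don't understand" behavior. The record uses the real Schema.org type `LearningResource` and its `name` and `learningResourceType` properties. The `posthuman:hasCode` property and the record's content are hypothetical. The sketch models the behavior at the application level and is not a JSON-LD processor.

```python
import json

# Standard Schema.org properties plus a hypothetical posthuman:* extension.
RECORD = json.loads("""
{
  "@context": {"@vocab": "https://schema.org/",
               "posthuman": "https://posthuman.education/ontology#"},
  "@type": "LearningResource",
  "name": "SIEM triage lab",
  "learningResourceType": "lab",
  "posthuman:hasCode": ["posthuman:HTE-M", "posthuman:NHA-AI"]
}
""")

# Keys a legacy consumer understands; everything else is silently skipped.
KNOWN = {"@context", "@type", "name", "learningResourceType"}

def legacy_view(record: dict) -> dict:
    """What a consumer that only knows standard properties sees."""
    return {k: v for k, v in record.items() if k in KNOWN}

def posthuman_codes(record: dict) -> list[str]:
    """What an extension-aware consumer can additionally read (empty if absent)."""
    return record.get("posthuman:hasCode", [])

print(legacy_view(RECORD)["name"], posthuman_codes(RECORD))
```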

Beyond Cybersecurity

We’ve demonstrated this through cybersecurity education, and that remains our main concern, but we see no reason the methodology wouldn’t generalize to any field where human-AI collaboration characterizes professional practice. Examples include healthcare professionals working with diagnostic AI systems and radiologists collaborating with image analysis algorithms. Climate scientists generate insights through human interpretation and computational simulation that neither could produce independently, and engineers use CAD and simulation tools that actively shape design thinking. Anywhere professional practice involves genuine human-AI collaboration, rather than humans using passive tools, this methodological approach should apply. Expanding to further domains is a logical next step.

Current Scope and Future Development

Where We Are Now

We’ve completed detailed analysis of four work roles from the NICE Framework (~8% coverage):

- OG-004 and OG-005 (education-focused roles) published in JCERP 2024

- OG-015 (strategic role) presented at ISCAP 2025

- CE-001 (operational role) preliminary post-publication extension

This represents a methodological proof of concept. We’re moving toward the ability to translate sophisticated posthumanist qualitative analysis into machine-readable semantic web formats while preserving theoretical nuance, and the methodology already reveals patterns that matter for curriculum design.

Honest Limitations

I also want to be completely transparent about what we haven’t done yet. We have no generalizable educational outcomes data, as this hasn’t been deployed in actual courses with learner outcome measurement. There’s also no pedagogical effectiveness evidence yet, as we’re contributing methodology and infrastructure at this point, and not proof of improved learning (though this is certainly on the roadmap). Likewise, this isn’t “classroom-ready” for immediate adoption yet. It requires additional validation phases before production deployment. And we’ve demonstrated the approach through detailed case studies, not complete systematic analysis across all 52 NICE Framework roles.

Should you adopt this RIGHT NOW expecting proven educational outcomes? No. Does this enable outcomes research that was previously impossible? Absolutely, and that’s what we find truly exciting. We’re building the measurement infrastructure necessary for that research.

Timeline for Open Release

The next phase involves systematic posthumanist coding across all NICE Framework work roles and competency areas, targeting Fall 2026 for public release. The release will include the complete JSON-LD framework with all work roles, a SPARQL query library with documented examples, implementation guides for educational technology developers, and curriculum design resources for cybersecurity education programs. We’re planning on making this all openly available, because measuring what actually matters in contemporary professional practice shouldn’t be a competitive advantage, but a baseline capability. The rising tide lifts all boats, and all that.

Next Steps

This is part of my broader research program I informally call PHASE (Posthuman Approaches to Security Education) that explores how posthumanist theory can transform cybersecurity education to better prepare students for contemporary professional practice where human-AI collaboration defines competence.

If you’re interested in this work, have questions about implementation, or want to explore collaboration opportunities, please do get in contact!

- Email: ryanstraight@arizona.edu

- ORCID: 0000-0002-6251-5662


Citation

```bibtex
@inproceedings{straight2025,
  author = {Straight, Ryan},
  title = {Teaching {Machines} to {Understand} {How} {Humans} and {AI} {Actually} {Work} {Together}},
  date = {2025-11-05},
  url = {https://ryanstraight.com/research/iscap-2025-posthuman-json-ld/},
  langid = {en}
}
```